What's That Noise?! [Ian Kallen's Weblog]

Saturday April 30, 2011


The mobile web, bookmarks and the apps they should be able to play with

Last year Marco Arment of Instapaper and Tumblr fame posted a plea to Apple: an open enhancement request to the Mobile Safari team for sane bookmarklet installation or alternatives. In it he detailed how mobile browsers such as Mobile Safari on iPhones and iPads are second-class citizens on the web. You can't build plug-ins for them or easily add bookmarklets (javascript you can invoke from the browser's bookmarks). Instapaper on iOS devices would greatly benefit from easier integration with the device's browser. I went through the bookmarklet copy/paste exercise that Instapaper walks you through; it's definitely a world of pain. Well, I read this morning that it looks like Apple is answering him, perhaps. Maybe backhanding him.


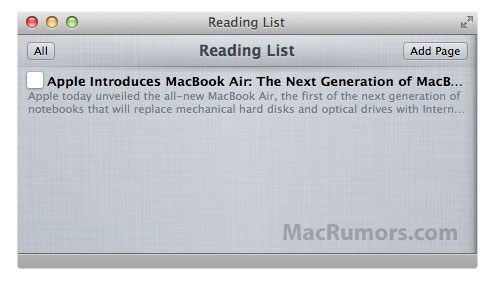

Apple is apparently implementing their own "Reading List" feature that current speculation says is squarely aimed at Instapaper and Read It Later. This came out of a MacRumors report on early builds of Lion, the next version of Mac OS X. VentureBeat rightly supposes there will be complementary features on iOS devices.

Like Marco, I'd like to be able to build apps that run natively on devices and can play more smoothly with the device's browser. While mobile SDKs may have support for running an embedded browser (AKA "web views"), they're still crippled by having the main browser in its own silo (and, as has been previously circulated, web view javascript engines are crippled in their own way). Ideally what we're seeing isn't an assault on existing bookmark services but the harbinger of APIs that will allow developers to integrate more of the browser's functionality. Certainly some things should continue to be closely sandboxed (e.g. browsing history visibility) or require full disclosure and permission (e.g. access to the cookie jar). Privacy leaks, phishing attacks and other sorts of miscreant behavior should obviously be guarded against, but bookmark additions seem like an easy thing to provide an API and safe user experience around.

As Delicious (and before them, Backflip RIP and good riddance) showed, bookmarks are significant social objects. Bookmarks are also a significant personal utility, much like an address book or calendar. I've often considered building an Instapaper or Read It Later type of app; I can't count the number of times I've found something I've wanted to read but didn't have time to read it that instant. Numerous efforts to build interesting things with bookmarks have risen and fallen (ma.gnolia.com) or stalled (sigh... xmarks, simpy). I'm convinced that the emergence of the mobile web and the native app ecosystems that play with them will create an abundance of new opportunities. But developers need to be enabled. Apple, please give us APIs, not more silos!

( Apr 30 2011, 09:22:48 AM PDT ) Permalink

Thursday January 20, 2011


KUSF: A village on the airwaves burned down

As some readers may know, I founded Rampage Radio with the guidance and support of Howie Klein back in 1982. I only stuck around for a few years and thereafter left it in Ron Quintana's able hands. But those were years with impact; I look back at them fondly and the show has been running on the air ever since. The last broadcast was in its usual time slot last Saturday night. As someone who grew up in San Francisco, I always felt that KUSF's presence at 90.3 was a comforting constant. Apparently a deal to sell off KUSF's frequency was consummated last week and the signal was abruptly shut off Tuesday morning. A rally and a dialog took place last night at Presentation Theater with USF President Father Stephen Privett. I commend Father Privett for coming out to face the music; all 500 or so of us in the packed theater were upset by these events and I think it took a degree of courage to show up. However, after the two-hour question and answer session, it became clear to me that Father Privett has suffered a third degree failure.


First, the outcome was poor; the students who he claimed to be acting on behalf of will have reduced volunteer support, the revenue (purported to benefit students) wasn't subject to a competitive bid (it was the first and only deal under discussion); just an NDA-cloaked back-room agreement. Aside from poorly serving the students, his notion of the University as an island, that serving the broader community is detrimental to serving the students, is fundamentally flawed. Serving the community and accepting the efforts of volunteers benefits both the students and the broader community.

Second, the process was terrible; instead of backing up and letting the array of interested parties know that a deal discussion might commence, he signed the non-disclosure agreement and completely shut out the faculty, students and community. Instead of embracing the stakeholders and providing some transparency, he went straight to the NDA and ambushed them.

And the third degree failure was the cowardly absence of recognition of the first two failures.

Father Privett claimed full responsibility, explained his rationale for what he did and the process he followed but his rationale for the process was weak. Before going under the cover of NDA, he should have reached out to the students, faculty and volunteers to say: before this goes away, give me some alternatives that will serve you better. Father Privett's gross incompetence was saddening, he should just resign. In the meantime, using another frequency as a fall back for a rejected FCC petition makes sense but there'll always be this sense of a vacated place in our hearts for 90.3 as San Francisco's cultural oasis.

I'm certainly hoping that KUSF can reemerge from the ashes. Please join the effort on Facebook to Save KUSF!

( Jan 20 2011, 10:27:58 AM PST ) Permalink

Sunday January 16, 2011


Managing Granular Access Controls for S3 Buckets with Boto

Storing backups is a long-standing S3 use case but, until the release of IAM the only way to use S3 for backups was to use the same credentials you use for everything else (launching EC2 instances, deploying artifacts from S3, etc). Now with IAM, we can create users with individual credentials, create user groups, create access policies and assign policies to users and groups. Here's how we can use boto to create granular access controls for S3 backups.


So let's create a user and a group within our AWS account to handle backups. Start by pulling and installing the latest boto release from github. Let's say you have an S3 bucket called "backup-bucket" and you want to have a user whose rights within your AWS infrastructure are confined to putting, getting and deleting backup files from that bucket. This is what you need to do:

- Create a connection to the AWS IAM service:

import boto

iam = boto.connect_iam()

- Create a user that will be responsible for the backup storage. When the credentials are created, the access_key_id and secret_access_key components of the response are what the user will need in order to use them, so save those values:

backup_user = iam.create_user('backup_user')

backup_credentials = iam.create_access_key('backup_user')

print backup_credentials['create_access_key_response']['create_access_key_result']['access_key']['access_key_id']

print backup_credentials['create_access_key_response']['create_access_key_result']['access_key']['secret_access_key']

- Create a group that will be assigned permissions and put the user in that group:

iam.create_group('backup_group')

iam.add_user_to_group('backup_group', 'backup_user')

- Define a backup policy and assign it to the group:

backup_policy_json="""{ "Statement":[{ "Action":["s3:DeleteObject", "s3:GetObject", "s3:PutObject" ], "Effect":"Allow", "Resource":"arn:aws:s3:::backup-bucket/*" } ] }"""

created_backup_policy_resp = iam.put_group_policy('backup_group', 'S3BackupPolicy', backup_policy_json)

Note: the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables need to be set or they need to be provided as arguments to the boto.connect_iam() call (which wraps the boto.iam.IAMConnection ctor).
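If you'd rather fail early than get an authentication error from deep inside boto, a quick pre-flight check along these lines can help. This is only a sketch: the helper name `missing_aws_credentials` is made up for illustration, and it only looks at the two environment variables named in the note above.

```python
import os

# The two environment variables named in the note above.
REQUIRED_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")


def missing_aws_credentials(env=None):
    """Return the required variable names that are unset or empty in env.

    Hypothetical helper, not part of boto. Pass a dict to check something
    other than the real process environment.
    """
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_aws_credentials()
    if missing:
        print("Set these before calling boto.connect_iam(): " + ", ".join(missing))
    else:
        print("AWS credentials found in the environment")
```

If the list comes back non-empty, either export the variables or pass the keys directly to the connect call as described above.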

Altogether Now:

import boto

iam = boto.connect_iam()

backup_user = iam.create_user('backup_user')

backup_credentials = iam.create_access_key('backup_user')

print backup_credentials['create_access_key_response']['create_access_key_result']['access_key']['access_key_id']

print backup_credentials['create_access_key_response']['create_access_key_result']['access_key']['secret_access_key']

iam.create_group('backup_group')

iam.add_user_to_group('backup_group', 'backup_user')

backup_policy_json="""{

"Statement":[{

"Action":["s3:DeleteObject",

"s3:GetObject",

"s3:PutObject"

],

"Effect":"Allow",

"Resource":"arn:aws:s3:::backup-bucket/*"

}

]

}"""

created_backup_policy_resp = iam.put_group_policy('backup_group', 'S3BackupPolicy', backup_policy_json)

While the command line tools for IAM are OK (Eric Hammond wrote a good post about them in Improving Security on EC2 With AWS Identity and Access Management (IAM)), I really prefer interacting with AWS programmatically over remembering all of the command line options for the tools that AWS distributes. I thought using boto and IAM was blog-worthy because I had a hard time discerning the correct policy JSON from the AWS docs. There's a vocabulary for the "Action" component and a format for the "Resource" component that wasn't readily apparent but after some digging around and trying some things, I arrived at the above policy incantation. Aside from my production server uses, I think IAM will make using 3rd party services that require AWS credentials to do desktop backups much more attractive; creating multiple AWS accounts and setting up cross-account permissions is a pain but handing over keys for all of your AWS resources to a third party is just untenable.
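Since hand-writing the policy JSON was the fiddly part, here's a sketch of generating it with the standard library instead. The function name `s3_backup_policy` is my own invention for illustration; the actions, effect and resource format are exactly the ones from the policy above, and nothing here talks to AWS.

```python
import json


def s3_backup_policy(bucket, actions=("s3:DeleteObject", "s3:GetObject", "s3:PutObject")):
    """Build a policy document allowing the given actions on every key in bucket.

    Hypothetical helper: it only assembles the JSON string shown in the post.
    """
    policy = {
        "Statement": [{
            "Action": list(actions),
            "Effect": "Allow",
            # Object-level actions apply to keys, hence the trailing /*
            "Resource": "arn:aws:s3:::%s/*" % bucket,
        }]
    }
    return json.dumps(policy, indent=2)


backup_policy_json = s3_backup_policy("backup-bucket")
```

The resulting string can be handed to iam.put_group_policy() the same way as the literal above. Building it from a dict means a stray comma or bracket shows up as a Python error rather than as a rejected policy.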

In the meantime, if you're using your master credentials for everything on AWS, stop. Adopt IAM instead! To read more about boto and IAM, check out

( Jan 16 2011, 03:09:15 PM PST ) Permalink

Sunday August 29, 2010


Scala Regular Expressions

I've been using Scala for the past several weeks; so far it's been a win. My use of Scala to date has been as a "better Java." I'm peeling the onion layers of functional programming and Scala's APIs, gradually improving the code with richer Scala constructs. But in the meantime, I'm mostly employing plain old imperative programming. At this point, I think that's the best way to learn it. Trying to dive into the functional programming deep end can be a bit of a struggle. If you don't know Java, that may not mean much but for myself, I'd used Java extensively in years past and the project that I'm working on has a legacy code base in Java already. One of the Java annoyances that has plagued my work in the past was the amount of code required to work with regular expressions. I go back a long way with regular expressions; Perl (a good friend, long ago) supports them natively and the code I've written in recent years, mostly Python and Ruby, benefitted from the regular expression support in those languages.


By annoyance, let's take an example that's simple in Perl since regexps are most succinct in the language of the camel (and historically the state of Perl is given in a State of the Onion speech):

#!/usr/bin/env perl

# re.pl

$input = "camelCaseShouldBeUnderBar";

$input =~ s/([a-z])([A-Z])/$1 . "_" . lc($2)/ge;

print "$input\n";

# outputs: camel_case_should_be_under_bar

# now go the other way

$input = "under_bar_should_be_camel_case";

$input =~ s/([a-z])_([a-z])/$1 . uc($2)/ge;

print "$input\n";

# outputs: underBarShouldBeCamelCase

Wanna do the same thing in Java? Well, for simple stuff Java's Matcher has a replaceAll method that is, well, dumb as a door knob. If you want the replacement to be based on characters captured from the input and processed in some way, you'd pretty much have to do something like this:

import java.util.regex.Pattern;

import java.util.regex.Matcher;

public class Re {

Pattern underBarPattern;

Pattern camelCasePattern;

public Re() {

underBarPattern = Pattern.compile("([a-z])_([a-z])");

camelCasePattern = Pattern.compile("([a-z])([A-Z])");

}

public String camel(String input) {

StringBuffer result = new StringBuffer();

Matcher m = underBarPattern.matcher(input);

while (m.find()) {

m.appendReplacement(result, m.group(1) + m.group(2).toUpperCase());

}

m.appendTail(result);

return result.toString();

}

public String underBar(String input) {

StringBuffer result = new StringBuffer();

Matcher m = camelCasePattern.matcher(input);

while (m.find()) {

m.appendReplacement(result, m.group(1) + "_" + m.group(2).toLowerCase());

}

m.appendTail(result);

return result.toString();

}

public static void main(String[] args) throws Exception {

Re re = new Re();

System.out.println("camelCaseShouldBeUnderBar => " + re.underBar("camelCaseShouldBeUnderBar"));

System.out.println("under_bar_should_be_camel_case => " + re.camel("under_bar_should_be_camel_case"));

}

}

OK, that's way too much code. The reason why this is such a PITA in Java is that the replacement part can't be an anonymous function, or a function at all, due to the fact that... Java doesn't have them. Perhaps that'll change in Java 7. But it's not here today.

Anonymous functions (in the camel-speak of olde, we might've said "coderef") are one area where Scala is just plain better than Java. scala.util.matching.Regex has a replaceAllIn method that takes one as its second argument. Furthermore, you can name the captured matches in the constructor. The anonymous function passed in can do stuff with the Match object passed in. So here's my Scala equivalent:

import scala.util.matching.Regex

val re = new Regex("([a-z])([A-Z])", "lc", "uc")

var output = re.replaceAllIn("camelCaseShouldBeUnderBar", m =>

m.group("lc") + "_" + m.group("uc").toLowerCase)

println(output)

val underBarRe = new Regex("([a-z])_([a-z])", "first", "second")

output = underBarRe.replaceAllIn("under_bar_should_be_camel_case", m =>

m.group("first") + m.group("second").toUpperCase)

println(output)

In both cases, we associate names to the capture groups in the Regex constructor. When the input matches, the resulting Match object makes the match data available to work on. In the first case

m.group("lc") + "_" + m.group("uc").toLowerCase

and in the second

m.group("first") + m.group("second").toUpperCase

That's fairly succinct and certainly so much better than Java. By the way, if regular expressions are a mystery to you, get the Mastering Regular Expressions book. In the meantime, keep peeling the onion.
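For comparison, since I mentioned leaning on Python's regular expression support, here's roughly how the same two conversions look there. This is a sketch using the same patterns as the Perl and Scala versions; the function names `under_bar` and `camel` are just borrowed from the Java example. Python's re.sub accepts a function as the replacement, which plays the role of Scala's anonymous function.

```python
import re


def under_bar(text):
    """camelCase -> under_bar: split at each lower/upper boundary."""
    return re.sub(r"([a-z])([A-Z])",
                  lambda m: m.group(1) + "_" + m.group(2).lower(),
                  text)


def camel(text):
    """under_bar -> camelCase: drop the underscore, upcase what follows."""
    return re.sub(r"([a-z])_([a-z])",
                  lambda m: m.group(1) + m.group(2).upper(),
                  text)


print(under_bar("camelCaseShouldBeUnderBar"))
print(camel("under_bar_should_be_camel_case"))
```

Same idea as the Scala: the match object is handed to a function and whatever it returns becomes the replacement.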

( Aug 29 2010, 06:40:32 PM PDT ) Permalink

Saturday July 24, 2010


Getting Started with a Scala 2.8 Toolchain

With the release of version 2.8 and enthusiasm amongst my current project's collaborators, I've fired up my latent interest in scala. There's a lot to like about scala: functional and object orientation, message based concurrency and JVM garbage collection, JITing, etc; it's a really interesting language. The initial creature comforts I've been looking for are a development, build and test environment I can be productive in. At present, it looks like the best tools for me to get rolling are IntelliJ, sbt and ScalaTest. In this post, I'll recount the setup I've arrived at on a MacBook Pro (Snow Leopard); so far so good.


Development:

I've used Eclipse for years and there's a lot I like about it but I've also had stability problems, particularly as plugins are added/removed from the installation (stay away from the Aptana ruby plugin, every attempt to use it results in an unstable Eclipse for me). So I got a new version of IntelliJ ("Community Edition"); the Scala plugin for v2.8 doesn't work with the IntelliJ v9.0.2 release so the next task was to grab and install a pre-release of v9.0.3 (see ideaIC-95.413.dmg). I downloaded a recent build of the scala plugin (see scala-intellij-bin-0.3.1866.zip) and unzipped it in IntelliJ's plugins directory (in my case, "/Applications/IntelliJ IDEA 9.0.3 CE.app/plugins/"). When I launched IntelliJ, Scala v2.8 support was enabled.

Build:

I've had love/hate relationships in the past with ant and maven; there's a lot to love for flexibility with the former and consistency with the latter (hat tip at "convention over configuration"). There's a lot to hate with the tedious XML maintenance that comes with both. So this go around, I'm kicking the tires on simple build tool (sbt). One of the really nice bits about sbt is that it has a shell you can run build commands in; instead of waiting for the (slow) JVM launch time every time you build/test you can incur the launch penalty once to launch the shell and re-run those tasks from within the sbt shell. Once sbt is set up, a project can be started from the console like this:

$ sbt update

You'll go into a dialog like this:

Project does not exist, create new project? (y/N/s) y

Name: scratch

Organization: ohai

Version [1.0]: 0.1

Scala version [2.7.7]: 2.8.0

sbt version [0.7.4]:

Now I want sbt to not only bootstrap the build system but also bootstrap the IntelliJ project. There's an sbt plugin for that. Ironically, bringing up the development project requires creating folder structures and code artifacts... which is what your development environment should be helping you with. While we're in there, we'll declare our dependency on the test framework we want (why ScalaTest isn't in the scala stdlib is mysterious to me; c'mon scala, it's 2010, python and ruby have both shipped with test support for years). So, fall back to console commands and vi (I don't do emacs) or lean on Textmate.

$ mkdir project/build

$ vi project/build/Project.scala

import sbt._

class Project(info: ProjectInfo) extends DefaultProject(info) with IdeaProject {

// v1.2 is the current version compatible with scala 2.8

// see http://www.scalatest.org/download

val scalatest = "org.scalatest" % "scalatest" % "1.2" % "test->default"

}

$ mkdir -p project/plugins/src

$ vi project/plugins/src/Plugins.scala

import sbt._

class Plugins(info: ProjectInfo) extends PluginDefinition(info) {

val repo = "GH-pages repo" at "http://mpeltonen.github.com/maven/"

val idea = "com.github.mpeltonen" % "sbt-idea-plugin" % "0.1-SNAPSHOT"

}

$ sbt update

$ sbt idea

Back in IntelliJ, do "File" > "New Module" > select "Import existing module" and specify the path to the "scratch.iml" file that the last console command produced.

Note how we declared our dependency on the test library and the repository to get it from with two lines of code, not the several lines of XML that would be used for each in maven.

Test:

Back in IntelliJ, right click on src/main/scala and select "New" > "Package" and specify "ohai.scratch". Right click on that package and select "New" > "Scala Class", we'll create a class ohai.scratch.Bicycle - in the editor put something like

package ohai.scratch

class Bicycle {

var turns = 0

def pedal { turns +=1 }

}

Now to test our bicycle, do similar package and class creation steps in the src/test/scala folder to create ohai.scratch.test.BicycleSpec:

package ohai.scratch.test

import org.scalatest.Spec

import ohai.scratch.Bicycle

class BicycleSpec extends Spec {

describe("A bicycle, prior to any pedaling -") {

val bike = new Bicycle()

it("pedal should not have turned") {

expect(0) { bike.turns }

}

}

describe("A bicycle") {

val bike = new Bicycle()

bike.pedal

it("after pedaling once, it should have one turn") {

expect(1) { bike.turns }

}

}

}

Back at your console, go into the sbt shell by typing "sbt" again. In the sbt shell, type "test". In the editor, make your bicycle do more things, write tests for those things (or reverse the order, TDD style) and type "test" again in the sbt shell. Lather, rinse, repeat, have fun.

( Jul 24 2010, 11:51:30 AM PDT ) Permalink


Friday March 19, 2010


Programmatic Elastic MapReduce with boto

I'm working on some cloud-homed data analysis infrastructure. I may focus in the future on using the Cloudera distribution on EC2 but for now, I've been experimenting with Elastic MapReduce (EMR). I think the main advantages of using EMR are:


- Configuring the namenode, tasktracker and jobtracker is tedious, EMR relieves you of those duties

- Instance pool setup/teardown is tightly integrated

- Automated pool member replacement if an instance goes down

- Built in verbs like the "aggregate" reducer

- Programmatic and GUI operation

While there's a slick EMR client tool implemented in ruby, I've got a workflow of data coming in/out of S3 and I'm otherwise working in Python (using an old friend, boto), so I'd prefer to keep my toolchain in that orbit. The last release of boto (v1.9b) doesn't support EMR but lo-and-behold it's in HEAD in the source tree. So if you check it out of the Google Code svn repo and set your AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables, you can programmatically run the EMR wordcount sample like this:

from time import sleep

from datetime import datetime

from boto.emr.step import StreamingStep

from boto.emr.connection import EmrConnection

job_ts = datetime.now().strftime("%Y%m%d%H%M%S")

emr = EmrConnection()

wc_step = StreamingStep('wc text', \

's3://elasticmapreduce/samples/wordcount/wordSplitter.py', \

'aggregate', input='s3://elasticmapreduce/samples/wordcount/input', \

output='s3://wc-test-bucket/output/%s' % job_ts)

jf_id = emr.run_jobflow('wc jobflow', 's3n://emr-debug/%s' % job_ts, \

steps=[wc_step])

while True:

    jf = emr.describe_jobflow(jf_id)

    print "[%s] %s" % (datetime.now().strftime("%Y-%m-%d %T"), jf.state)

    if jf.state == 'COMPLETED':

        break

    sleep(10)
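One caveat with the loop above: it only exits on 'COMPLETED', so a job flow that fails would leave it polling forever. Here's a sketch of a more defensive wait. The helper name `wait_for_jobflow` and the `max_polls` cap are my own additions, and the set of terminal state names is an assumption to verify against the EMR docs; the state lookup is passed in as a function so the helper itself doesn't depend on boto.

```python
import time

# Assumption: states after which a job flow stops changing.
TERMINAL_STATES = ("COMPLETED", "FAILED", "TERMINATED")


def wait_for_jobflow(get_state, poll_seconds=10, max_polls=360, sleep=time.sleep):
    """Poll get_state() until it returns a terminal state or max_polls is hit.

    Returns the last state seen; the caller decides what to do with it.
    """
    state = None
    for _ in range(max_polls):
        state = get_state()
        if state in TERMINAL_STATES:
            break
        sleep(poll_seconds)
    return state
```

With the connection from the script above, this would be called as wait_for_jobflow(lambda: emr.describe_jobflow(jf_id).state), and anything other than 'COMPLETED' coming back means the output bucket shouldn't be trusted.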

Have fun hadooping!

hadoop boto python aws elasticmapreduce s3 ec2

( Mar 19 2010, 11:48:51 AM PDT ) Permalink

Saturday January 23, 2010


2010: An Odyssey Continues

Hello, 2010. 2009 was a strange year, indeed. I quit my job after 5 years last spring, since then I've been working as a software/infrastructure consultant and playing with a lot of new technologies (of course also leveraging expertise I have with some more established ones). I've also done a lot of thinking. Thinking about cloud computing and compute marketplaces, about energy, mobile and transportation technologies, about life and the meaning of the whole thing. Consulting has had its ups and downs. Looking back at 2009 though, I can safely say I'm glad it's over. Not that it's all bad news, I have my share of things to be pleased about too. My daughter's Bat Mitzvah was beautiful. I've been spending more time with the kids, switched to decaf (I really enjoy the taste of coffee but excess caffeine isn't healthy) and was invited by my old friends in Metallica to some of their seminal events.


Nonetheless, 2010 will no doubt be an upgrade over 2009.

One of the things I'm spending more domestic time around is Odyssey of the Mind, a creative problem solving competition that my son is participating in. My daughter did it previously and went to the world finals with her team twice, my son went last year, and (warning: proud papa bragging alert) placed 4th! This year the coaching torch is passed to me and I'm working with the team facilitating their solution for the long term problem which will be presented at the regional competition next month. We're hoping for a set of repeat victories that will send us to the world finals again this year. I'm new to coaching OotM but my co-coach has coached before and I've helped the teams in supporting roles in years past, so the Odyssey regime is not completely new to us.

This week will present some interesting challenges. My co-coach is a physician and will be flying into Haiti to join the relief effort; I'm really happy for her to have this opportunity to be of service. I'll do my best to keep the OotM team moving forward until she returns.

Work will pick up too. Consulting has given me an opportunity to learn Ruby, Rails and a lot of stuff in that technology orbit. I've also been putting Amazon Web Services to heavy use and playing around with Twitter's APIs. I've been looking for opportunities to scratch some Hadoop itches and lately my interests have turned towards programming in Scala; I expect some consulting gigs to shake loose to sate those interests. If the opportunity is right, my entrepreneurial impulses will get the best of me and I'll stop (or scale back) consulting to jump on a new start-up. My desire to set the world on fire will never be satisfied.

Yea, 2010 is gonna be good. Check it out, the Giants have made some changes that look like a credible offense. Already, it's gotta be better than 2009.

( Jan 23 2010, 10:02:26 PM PST ) Permalink

Thursday December 03, 2009


Scaling Rails with MySQL table partitioning

Often the first step in scaling MySQL back-ended web applications is integrating a caching layer to reduce the frequency of expensive database queries. Another common pattern is to denormalize the data and index to optimize read response times. Denormalized data sets solve the problem up to a point; when the indexes exceed reasonable RAM capacities (which is easy to do with high data volumes) these solutions degrade. A general pattern for real time web applications is the emphasis on recent data, so keeping the hot recent data separate from the colder, larger data set is fairly common. However, purging denormalized data records that have aged beyond usefulness can be expensive. Databases will typically lock records up, slow or block queries and fragment the on-disk data images. To make matters worse, MySQL will block queries while the data is de-fragmented.


MySQL 5.1 introduced table partitioning as a technique to cleanly prune data sets. Instead of purging old data and the service interruptions that a DELETE operation entails, you can break up the data into partitions and drop the old partitions as their usefulness expires. Furthermore, your query response times can benefit from knowing about how the partitions are organized; by qualifying your queries correctly you can limit which partitions get accessed.

But MySQL's partitioning has some limitations that may be of concern:

- The partitioning criteria must be an integer value. It's easy to express date information as integer values for the purposes of partitioning and in fact you can (albeit intrusively) partition with non integer values using triggers. But the underlying partitioning that MySQL implemented mandates integer criteria.

- All columns used in the partitioning expression for a partitioned table must be part of every unique key that the table may have. This will impact your design as to your use of primary keys and unique keys.

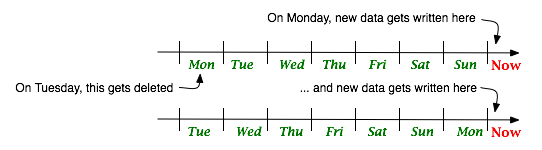

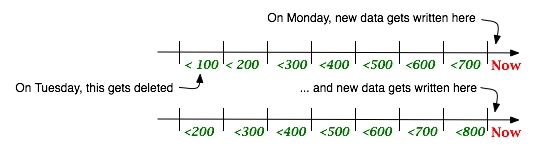

When we first looked at MySQL's table partitioning, this seemed like a deal breaker. The platform we've been working with was already on MySQL 5.1 but it was also a rails app. Rails has its own ORM semantics that uses integer primary keys. What we wanted were partitions like this:

Here, data is segmented into daily buckets and as time advances, fresh buckets are created while old ones are discarded. Yes, with some social web applications, a week is the boundary of usefulness that's why there are only 8 buckets in this illustration. New data would be written to the "Now" partition, yesterday's data would be in yesterday's bucket and last Monday's data would be in the "Monday" bucket. I initially thought that the constraints on MySQL's partitioning criteria might get in the way; I really wanted the data partitioned by time but needed to partition by the primary keys (sequential integers) that rails used like this:

In this case, data is segmented by a ceiling on the values allowed in each bucket. The data with ID values less than 100 go in the first bucket, less than 200 in the second and so on.
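To make the ceiling idea concrete, here is a minimal sketch in plain ruby of how an ID lands in a bucket. The bucket names, the `BUCKET_CEILINGS` constant and the `bucket_for` helper are all hypothetical, made up for illustration; MySQL does this routing internally.

```ruby
# Hypothetical bucket ceilings, mirroring the illustration (100 IDs per bucket).
BUCKET_CEILINGS = [
  ["bucket_0", 100],
  ["bucket_1", 200],
  ["bucket_2", 300]
].freeze

# Returns the first bucket whose ceiling is above the id, which is how
# "VALUES LESS THAN" style range buckets behave. IDs above every ceiling
# fall through to a catch-all bucket.
def bucket_for(id, ceilings = BUCKET_CEILINGS, catch_all = "bucket_max")
  match = ceilings.find { |_name, ceiling| id < ceiling }
  match ? match.first : catch_all
end
```

Note that a ceiling is exclusive: ID 100 belongs to the second bucket, not the first.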

Consider an example, suppose you had a table like this:

    CREATE TABLE tweets (
      id bigint(20) unsigned NOT NULL AUTO_INCREMENT,
      status_id bigint(20) unsigned NOT NULL,
      user_id int(11) NOT NULL,
      status varchar(140) NOT NULL,
      source varchar(24) NOT NULL,
      in_reply_to_status_id bigint(20) unsigned DEFAULT NULL,
      in_reply_to_user_id int(11) DEFAULT NULL,
      created_at datetime NOT NULL,
      PRIMARY KEY (id),
      KEY created_at (created_at),
      KEY user_id (user_id)
    );

You might want to partition on the "created_at" time but that would require having the "created_at" field in the primary key, which doesn't make any sense. I worked around this by leveraging the common characteristic of rails' "created_at" timestamp and integer primary key "id" fields: they're both monotonically ascending. What I needed was to map ID ranges to time frames. Suppose we know, per the illustration above, that we're only going to have 100 tweets a day (yes, an inconsequential data volume that wouldn't require partitioning, but for illustrative purposes); we'd change the table like this:

    ALTER TABLE tweets PARTITION BY RANGE (id) (
      PARTITION tweets_0 VALUES LESS THAN (100),
      PARTITION tweets_1 VALUES LESS THAN (200),
      PARTITION tweets_2 VALUES LESS THAN (300),
      PARTITION tweets_3 VALUES LESS THAN (400),
      PARTITION tweets_4 VALUES LESS THAN (500),
      PARTITION tweets_5 VALUES LESS THAN (600),
      PARTITION tweets_6 VALUES LESS THAN (700),
      PARTITION tweets_maxvalue VALUES LESS THAN MAXVALUE
    );

When the day advances, it's time to create a new partition and drop the old one, so we do this:

    ALTER TABLE tweets REORGANIZE PARTITION tweets_maxvalue INTO (
      PARTITION tweets_7 VALUES LESS THAN (800),
      PARTITION tweets_maxvalue VALUES LESS THAN MAXVALUE
    );
    ALTER TABLE tweets DROP PARTITION tweets_0;

Programmatically determining what the new partition name should be (tweets_7) requires introspecting on the table itself. Fortunately, MySQL has metadata about tables and partitions in its "information_schema" database. We can query it like this:

    SELECT partition_ordinal_position AS seq,
           partition_name AS part_name,
           partition_description AS max_id
    FROM information_schema.partitions
    WHERE table_name = 'tweets' AND table_schema = 'twitterdb'
    ORDER BY seq;
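As a rough sketch of what to do with those rows, the ruby below derives the next partition name and builds the two rotation statements. It assumes the rows arrive as an array of hashes keyed by the column aliases in the query; `next_partition_name` and `rotation_sql` are hypothetical helper names, not part of rails or MySQL, and the "tweets_" prefix and "tweets_maxvalue" catch-all follow the naming used in this post.

```ruby
# rows: results of the information_schema query, ordered by seq, e.g.
#   { :seq => 1, :part_name => "tweets_0", :max_id => "100" }
# Finds the highest numbered partition and returns the next name in sequence.
def next_partition_name(rows, prefix = "tweets_")
  pattern = /\A#{Regexp.escape(prefix)}(\d+)\z/
  numbers = rows.map { |row| row[:part_name][pattern, 1] }.compact.map(&:to_i)
  next_index = numbers.empty? ? 0 : numbers.max + 1
  "#{prefix}#{next_index}"
end

# Builds the REORGANIZE and DROP statements for one rotation.
# new_ceiling is the exclusive upper ID bound for the new partition.
def rotation_sql(rows, new_ceiling, table = "tweets", prefix = "tweets_")
  new_name = next_partition_name(rows, prefix)
  oldest = rows.first[:part_name]
  catch_all = "#{prefix}maxvalue"
  reorganize = "ALTER TABLE #{table} REORGANIZE PARTITION #{catch_all} INTO (" +
               "PARTITION #{new_name} VALUES LESS THAN (#{Integer(new_ceiling)}), " +
               "PARTITION #{catch_all} VALUES LESS THAN MAXVALUE)"
  drop = "ALTER TABLE #{table} DROP PARTITION #{oldest}"
  [reorganize, drop]
end
```

Passing the ceiling through `Integer()` is a small guard so that only a number can be interpolated into the DDL.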

So that's the essence of the table partition life-cycle. One issue here is that when we reorganize the partition, MySQL completely rewrites it; it doesn't atomically rename "tweets_maxvalue" to "tweets_7" and allocate a new empty "tweets_maxvalue" (that would be nice). If the partition is populated with data, the rewrite consumes resources; the more data, the more so. The availability of a table that has a moderate throughput of data (let's say 1 million records daily, not 100) would suffer; locking the table for a long duration while waiting for the rewrite is unacceptable for time sensitive applications. Given that issue, what we really want is to anticipate the ranges and reorganize the MAXVALUE partition in advance. When we allocate the new partition, we set an upper boundary on its ID values, so we need to predict what that upper boundary will be. Fortunately, we put an index on created_at so we can introspect on the data to determine the recent ID consumption rate like this:

    -- One sample per day: the latest timestamp and the highest ID written that day.
    SELECT UNIX_TIMESTAMP(MAX(created_at)), MAX(id) FROM tweets WHERE DATE(created_at) = DATE(NOW() - INTERVAL 1 DAY);
    SELECT UNIX_TIMESTAMP(MAX(created_at)), MAX(id) FROM tweets WHERE DATE(created_at) = DATE(NOW() - INTERVAL 2 DAY);
    SELECT UNIX_TIMESTAMP(MAX(created_at)), MAX(id) FROM tweets WHERE DATE(created_at) = DATE(NOW() - INTERVAL 3 DAY);
    SELECT UNIX_TIMESTAMP(MAX(created_at)), MAX(id) FROM tweets WHERE DATE(created_at) = DATE(NOW() - INTERVAL 4 DAY);

The timestamps (epoch seconds) and ID deltas give us what we need to determine the ID consumption rate N; the new range to allocate is simply max(id) + N.
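The arithmetic can be sketched in a few lines of ruby. This assumes the samples from the queries above have been collected as `[epoch_seconds, max_id]` pairs; `daily_id_rate` and `next_ceiling` are hypothetical names, and a simple average over the sampled window is only one way to estimate N.

```ruby
SECONDS_PER_DAY = 86_400

# samples: [[epoch_seconds, max_id], ...], one pair per sampled day.
# Returns N, the average number of IDs consumed per day over the window,
# rounded up so the estimate errs on the generous side.
def daily_id_rate(samples)
  sorted = samples.sort_by(&:first)
  first_time, first_id = sorted.first
  last_time, last_id = sorted.last
  raise ArgumentError, "samples must span some time" if last_time <= first_time
  ((last_id - first_id) * SECONDS_PER_DAY / (last_time - first_time).to_f).ceil
end

# The ceiling for the next partition: max(id) + N.
def next_ceiling(samples)
  samples.map(&:last).max + daily_id_rate(samples)
end
```

In practice you would probably want some headroom on top of N, since a partition that fills early spills the rest of the day's rows into the MAXVALUE partition.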

OK, so where's the rails? All of this so far has been transparent to rails. To take advantage of the partition access optimization opportunities mentioned above, it's useful to have application level knowledge of the time frame to ID range mappings. Let's say we just want recent tweets from a user M and we know that the partition boundary from a day ago is N; we can say:

    # m is the user's ID (M), n is the partition boundary ID from a day ago (N)
    Tweet.find(:all, :conditions => ["user_id = ? AND id > ?", m, n])

This will perform better than the conventional time-based query (even if we created an index on user_id and created_at):

    Tweet.find(:all, :conditions => ["user_id = ? AND created_at > now() - interval 1 day", m])

Scoping the query to a partition in this way isn't unique to rails (or even to MySQL; with Oracle we can explicitly name the partitions we want a query to hit in SQL). Putting an id range qualifier in the WHERE clause of our SQL statements will work with any application environment. I chose to focus on rails here because of the integer primary keys that rails requires and the challenge that poses. To really integrate this into your rails app, you might create a model that has the partition metadata.
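One possible shape for that partition metadata, sketched as a plain ruby class rather than an ActiveRecord model so it stands alone. `PartitionMap` and `floor_id_since` are hypothetical names, and the assumption is that you record the lowest ID written on each day as partitions are rotated.

```ruby
require "date"

# Hypothetical stand-in for a partition metadata model: it maps each day
# to the lowest ID written on that day.
class PartitionMap
  # boundaries: { Date => lowest id for that date }
  def initialize(boundaries)
    @boundaries = boundaries.sort.to_h
  end

  # Lowest ID belonging to the given date or any later known date.
  # Returns nil when the date is newer than every known boundary.
  def floor_id_since(date)
    entry = @boundaries.find { |day, _id| day >= date }
    entry && entry.last
  end
end
```

The returned ID is what you would bind as N in a finder, using `id >= ?` since the boundary itself belongs to the requested day.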

I hope this is helpful to others who are solving real time data challenges with MySQL (with or without rails); I didn't turn up much about how folks manage table partitions when I searched for it. There's an interesting article about using the MySQL scheduler and stored procedures to manage partitions but I found the complexity of developing, testing and deploying code inside MySQL more of a burden than I wanted to carry, so I opted to do it all in ruby and integrate it with the rails app. If readers have any better techniques for managing MySQL table partitions, please post about it!

Table partitioning handles a particular type of data management problem but it won't answer all of our high volume write capacity challenges. Scaling write capacity requires distributing the writes across independent indexes; sharding is the common technique for that. I'm currently investigating HBase, which transparently distributes writes to Hadoop data nodes, possibly in conjunction with an external index (solr or lucene), as an alternative to sharding MySQL. Hadoop is sufficiently scale free for very large workloads but real time data systems have not been its forte. Perhaps that will be a follow up post.

Thursday September 10, 2009

Hub-a-dub-bub, feed clouds in a tub

While at Technorati, I observed a distinct shift around summer of 2005 in the flow of pings from largely worthwhile pings to increasingly worthless ones. A ping is a simple message with a URL. Invented by Dave Winer, it was originally implemented with XML-RPC but RESTful variants also emerged. The intention of the message is "this URL updated." For a blog, the URL is the main page of the blog; it's not the feed URL, a post URL ("permalink") or any other resource on the site - it's just the main page. Immediately, that narrow scope has a problem; for a service that should do something with the ping, the notification has no more information about what changed on the site. A new post? Multiple new posts? Um, what are the new post URL(s)? Did the blogroll change? And so on. Content fetching and analysis is cheap at low volumes; coping with network latencies, parsing content and doing interesting stuff with it is easy then, but it is difficult to scale.

In essence, a ping is a cheap message to prompt an expensive analysis to detect change. It's an imbalanced market of costs and benefits. Furthermore, even if the ping had a richer payload of information, pings lack another very important component: authenticity. These cheap messages can be produced by anybody for any URL and the net result I observed was that lots of people produced pings for lots of things, most of which weren't representative of real blogs (other types of sites or just spam) or real changes on blogs, just worthless events that were resource intensive to operate on. When I left Technorati in March (2009), we were getting around 10 million pings a day, roughly 95% of them of no value. Pings are the SMTP of the blogosphere; weak identity systems, spammy and difficult to manage at scale.
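For readers who haven't seen one, this is roughly what the XML-RPC flavor of a ping carries. The sketch below only builds the request body; `ping_payload` and `xml_escape` are hypothetical helpers, and the blog name and URL in any call are whatever the sender claims, which is exactly the authenticity problem described above.

```ruby
XML_ESCAPES = { "&" => "&amp;", "<" => "&lt;", ">" => "&gt;" }.freeze

# Minimal escaping for text placed inside XML elements.
def xml_escape(text)
  text.gsub(/[&<>]/, XML_ESCAPES)
end

# Builds the body of a weblogUpdates.ping call: a blog name and the
# URL of the blog's main page, and nothing else.
def ping_payload(blog_name, blog_url)
  <<~XML
    <?xml version="1.0"?>
    <methodCall>
      <methodName>weblogUpdates.ping</methodName>
      <params>
        <param><value><string>#{xml_escape(blog_name)}</string></value></param>
        <param><value><string>#{xml_escape(blog_url)}</string></value></param>
      </params>
    </methodCall>
  XML
end
```

Nothing in the payload says what changed or proves who sent it; the receiver has to fetch and analyze the site to find out.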

Besides passively waiting for pings, the other method to find things that have changed is to poll sites. But polling is terribly inefficient. To address those inefficiencies, FriendFeed implemented Simple Update Protocol. SUP is reminiscent of another Dave Winer invention, changes.xml; however, SUP accounts for discovery and offers a more compact format.

But SUP wasn't the first effort to address the aforementioned deficiencies; 2005 saw a lot of activity around something called "feedmesh." Unfortunately, the activity degenerated into a lot of babble; noise I attribute to unclear objectives and ego battles but I'm sure others have their own interpretation. The feedmesh discussion petered out and little value beyond ping-o-matic and other ping relayers emerged from it. Shortly thereafter SixApart created their atom stream, I think spearheaded by Brad Fitzpatrick. The atom stream is essentially a long lived HTTP connection that streams atom elements to the client. The content flow was limited to SixApart's hosted publishing platforms (TypePad, LiveJournal and Vox) and the reliability wasn't that great, at least in the early days, but it was a big step in the right direction for the blog ecosystem. The atom stream was by far the most efficient way to propagate content from the publishing platform to interested parties such as Technorati operating search, aggregation and analytic systems. It eliminates the heavyweight chatter of cheap ping messages and the heavyweight process that follows: fetch page, discover feed, fetch feed, inspect contents and do stuff with it.

So here we are in 2009 and it feels like deja-vu all over again. This time Dave is promoting rssCloud. rssCloud does a waltz of change notification; the publisher notifies a hub, the hub broadcasts notifications to event subscribers and the subscriber does the same old content fetch and analysis cycle above. rssCloud seems fundamentally flawed in that it is dependent on IP addresses as identifiers. Notification receivers who must use a new IP address must re-subscribe. I'm not sure how an aggregator should distribute notification handling load across a pool of IP addresses. The assumption is that notification receivers will have stable, singular IP addresses; rssCloud appears scale limited and a support burden. The focus on specification simplicity has its merits; I think we all hate gratuitous complexity (observe the success of JSON over SOAP). However, Dave doesn't regard system operations as an important concern; he'll readily evangelize a message format and protocol but the real world operability is Other People's Problem.

Pubsubhubbub (hey, Brad again) has a similar waltz but eliminates the content fetch and analysis cycle; ergo, it's fundamentally more efficient than rssCloud's waltz. Roughly, Pubsubhubbub is to the SixApart atom stream what rssCloud is to the old school XML-RPC ping. If I were still at Technorati (or working on event clients like Seesmic, Tweetdeck, etc), I would be implementing Pubsubhubbub and taking a pass on rssCloud.

With both systems, I'd be concerned with a few issues. How can the authenticity of the event be trusted? Yes, we all like simplicity but the comparison to SMTP is apt; now mail systems must be aware of SPF, DKIM, SIDF, blahty blah blah. It's common for mail clients to access MTAs over cryptographically secure connections. Mail is a mess but it works, albeit with a bunch of junk overlaid. I guess this is why Wave Protocol has gathered interest. Anyway, Pubsubhubbub has a handshake during the subscription process, though it looks like a malicious party could still spin up endpoints with bogus POSTs. I'd like to see an OAuth or digest authentication layer in the ecosystem. Yea, it's a little more complicated, but nothing onerous... suck it up. Whatever. Brad knows the authenticity rap, I mean he also invented OpenID (BTW, we adopted OpenID at Technorati back in 2007).

At Technorati we had to implement ping throttling to combat extraneous ping bots: either daemons or cron jobs that just ping continuously, whether the URL has changed or not. You can't just blacklist that URL; we don't know that it was the author generating those pings, since there's no identity authenticity there. We resorted to blocking IP addresses but that scales poorly and creates other support problems. We had 1000's of domain names blocked and whole class C networks blocked but it was always a whack-a-mole exercise; so much for an open blogosphere, a whitelist is the only thing that scales within those constraints.

Meanwhile, back to rssCloud and Pubsubhubbub, what event delivery guarantees can be made? If a data center issue or something else interrupts event flow, are events spooled so that event consumption can be caught up? How can subscribers track the authenticity of the event originators? How can publishers keep track of who their subscribers are? Well, I'm not at Technorati anymore so I'm no longer losing any sleep over these kinds of concerns but I do care about the ecosystem. For more, Matt Mastracci is posting interesting stuff about Pubsubhubbub and a sound comparison was posted to Techcrunch yesterday.
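As an aside on the ping throttling mentioned above, the basic idea fits in a few lines of ruby. This is a minimal in-memory sketch under my own assumptions (one minimum interval per URL, state kept in a hash), not a description of what actually ran at Technorati; `PingThrottle` is a hypothetical name.

```ruby
# Accepts at most one ping per URL within a minimum interval.
class PingThrottle
  def initialize(min_interval_seconds)
    @min_interval = min_interval_seconds
    @last_accepted = {} # url => epoch seconds of the last accepted ping
  end

  # True if the ping should be processed; false if this URL was
  # accepted too recently. Rejected pings don't reset the clock.
  def accept?(url, now = Time.now.to_i)
    last = @last_accepted[url]
    return false if last && now - last < @min_interval
    @last_accepted[url] = now
    true
  end
end
```

A throttle like this caps the cost of a noisy pinger but does nothing about authenticity; it can't tell whether the author or a bot sent the ping.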

( Sep 10 2009, 12:35:37 PM PDT ) Permalink

Sunday August 02, 2009

Business Models in Consulting, Contracting and Training

Since leaving Technorati last spring, I've been working independently with a few entrepreneurs on their technical platforms. This has mostly entailed working with tools around Infrastructure-As-A-Service (AWS), configuration management (Chef and RightScale), search (Solr) and learning a lot about programming and going into production with ruby and rails. I've spoken to a number of friends and acquaintances who are working as consultants, contractors, technical authors and trainers. Some are working as lone-wolves and others work within, or have founded, larger organizations. I'm always sniffing for where the upside opportunities are and the question that comes to my mind is: how do such businesses scale?

A number of technology services companies that I've taken notice of have been funded in the last year or so including OpsCode, Reductive Labs, Cloudera and Lucid Imagination. I think all of these guys are in great positions; virtual infrastructure (which is peanut butter to the chocolate of IaaS), big data and information retrieval technologies provide the primordial goo that will support new mobile, real time and social software applications. They are all working in rapid innovation spaces that hold high value potentials but also new learning and implementation challenges that rarefy specialized knowledge.

Years ago when I was working with Covalent Technologies, we tried to build a business around "enterprise open source" with Apache, Tomcat, etc as the basis. Packaging and selling free software is difficult. On the one hand, offering a proven technology stack configuration to overcome the usual integration and deployment challenges as well as providing a support resource is really valuable to Fortune 500's and such. However, my experience there and observations of what's happened with similar businesses (such as Linuxcare and SpikeSource) have left me skeptical about how big the opportunity is. After all, while you're competing with the closed-source proprietary software vendors, you're also competing with Free.

The trend I'm noticing is the branching out away from the packaging and phone support and into curriculum. Considering that most institutional software technology education, CS degrees, extended programs, etc have curricula that are perpetually behind the times, it makes sense that the people who possess specialized knowledge on the bleeding edge lead the educational charge. Lucid Imagination, Cloudera and Scale Unlimited are illustrating this point. While on-premise training can be lucrative, I think online courseware may provide a good answer to the business scale question.

For myself, I'm working with and acquiring knowledge in these areas tactically. Whatever my next startup will be, it should be world-changing and lucrative. And I'll likely be using all of these technologies. Thank goodness these guys are training the workforce of tomorrow!

startups scalability entrepreneuring

( Aug 02 2009, 08:57:08 PM PDT ) Permalink

Thursday May 21, 2009

Configuring Web Clouds with Chef

I'm not generally passionate about network and system operations, I prefer to focus my attention and creativity on system and software architectures. However, infrastructure provisioning, application deployment, monitoring and maintenance are facts of life for online services. When those basic functions aren't functioning well, then I get passionate about them. When service continuity is impacted and operations staff are overworked, it really bothers me; it tells me that I or other developers I'm working with are doing a poor job of delivering resilient software. I've had many conversations with folks who've accepted as a given that development teams and operations teams have friction between them; some even suggest that they should. After all, so goes that line of thinking, the developers are graded on how rapidly they implement features and fix bugs whereas the operators are graded on service availability and performance. Well, you can sell that all you want but I won't buy it.

In my view, developers need to deliver software that can be operated smoothly and operators need to provide feedback on how smoothly the software is operating; dev and ops must collaborate. I accept as a given that developers

- Use source control

- Write unit tests (after the fact or before/during TDD style)

- Write functional and integration tests

- Maintain a build system for running test harnesses and packaging code

- Document internal architecture and operating interfaces

- Plan for change with respect to scale characteristics and functionality

- Use configuration management

- Automate infrastructure provisioning, code deployment and rollback

- Monitor infrastructure and application metrics

So I've been giving Chef a test-drive for this infrastructure-on-EC2 management project that's been cooking. The system implemented the following use cases:

- Launch web app servers on EC2 with Apache, Passenger, RoR (+other gems) and overlay a set of rails apps out of git

- Launch a pair of reverse proxies (with ha-proxy) in front of the app servers - and reconfigure them when the set of app servers is expanded or contracted

- Configure the proxy for failover with heartbeat

- Add new rails apps to the set of app servers

- Update/roll back rails apps
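To give a flavor of the proxy reconfiguration use case, here is a plain ruby sketch that regenerates an ha-proxy stanza from the current list of app servers. It deliberately doesn't use Chef's own resources or templates; `haproxy_backend`, the `rails_apps` listener name and the `appN` server names are all hypothetical.

```ruby
# Renders an ha-proxy "listen" stanza for the given app server addresses.
# Re-run it whenever the set of app servers expands or contracts.
def haproxy_backend(app_servers, port = 80)
  lines = ["listen rails_apps 0.0.0.0:#{port}", "  balance roundrobin"]
  app_servers.each_with_index do |host, index|
    # "check" asks ha-proxy to health-check the server
    lines << "  server app#{index} #{host}:#{port} check"
  end
  lines.join("\n") + "\n"
end
```

In a configuration management tool, the server list would come from whatever inventory the tool maintains, and the rendered file would trigger a proxy reload when it changes.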

There's a lot of energy in the Chef community (check out Casserole); combined with monitoring, log management and cloud technologies, I think there's a lot of IT streamlining ahead. Perhaps the old days of labor and communication intensive operations will give way to a new era of autonomic computing. I'll post further about some of the mechanics of working with ruby, rails, chef, EC2, chef-deploy and other tools in the weeks ahead (particularly now that EC2 has native load balancing, monitoring and auto-scaling capabilities). I'll also talk a bit about this stuff at a Velocity BoF. If you're thinking about attending Velocity, O'Reilly is offering 30% off to the first 30 people to register today with the code vel09d30 (no, I'm not getting any kinduva kickback from O'Reilly). And you can catch Infrastructure in the Cloud Era with Adam Jacob (Opscode) and Ezra Zygmuntowicz (EngineYard) to learn more about Chef and cloud management.

chef puppet cfengine ec2 aws ruby cloud computing velocity

( May 21 2009, 12:30:07 PM PDT ) Permalink Comments [2]

Tuesday March 31, 2009

Cloning VMware Machines

I bought a copy of VMware Fusion on special from Smith Micro (icing on the cake: they had a 40% off special that week) specifically so I could simulate a network of machines on my local MacBook Pro. While I've heard good things about VirtualBox, one of the other key capabilities I was looking for from Macintosh virtualization software was the ability to convert an existing Windows installation to a virtual machine. VMware reportedly has the best tools for that kind of thing. I have an aging Dell with an old XP that I'd like to preserve when I finally decide to get rid of the hardware; when it's time to Macify, I'll be good to go.

I started building my virtual network very simply, by creating a CentOS VM. Once I had my first VM running, I figured I could just grow the network from there; I was expecting to find a "clone" item in the Fusion menus but alas, no joy. So, it's time to hack. Looking around at the artifacts that Fusion created, a bunch of files in a directory named for the VM, I started off by copying the directory along with the files it contained that had the virtual machine name as a component of the file name, and then edited the metadata files ({vm name}.vmdk/.vmx/.vmxf). When I told Fusion to launch that machine, it asked whether this was a copied or a moved VM - I told it that it was copied and the launch continued. Both launched VMs could ping each other so voila: my virtual network came into existence.

I've since found another procedure to create "linked clones" in VMware Fusion. It looks like this will be really useful for my next scenario of having two different flavors of VMs running on my virtual network. The setup I want to get to is one where I can have a "manager" host (to run provisioning, monitoring and other management applications) and cookie-cutter "worker" hosts (webservers, databases, etc). Ultimately, this setup will help me tool up for cloud platform operations; I have more Evil Plans there.

So all of this has me wondering: why doesn't VMware support this natively? Where's that menu option I was looking for? Is there an alternative to this hackery that I just overlooked?

vmware virtualization centos vmware fusion vm cloning

( Mar 31 2009, 09:12:22 AM PDT ) Permalink Comments [2]

Thursday March 12, 2009

Going to Metallica's Rock and Roll Hall of Fame Induction

Those 25 things you should know about me memes circulating rarely interest me (honestly, I don't care that you have a collection of rare El Salvadoran currency). However, one thing that my friends know but regular readers may not is that I have a fairly eclectic background. Did you know that I used to hang around the art department's hot glass studio in college to blow glass? Did you know that I learned to program in Pascal when I was in college and hated it? Yea, yea, I don't care much anymore either. But anyway, back in the 80's I was friends with this Danish dude from LA who shared my interest in the underground heavy metal scene that was burgeoning, particularly in Britain ("New Wave of British Heavy Metal" AKA NWOBHM) and Europe. We used to trade records and demos (the first Def Leppard 3 song EP on 9" vinyl, I was tired of it so I traded him for a bunch of Tygers of Pan Tang and other crap I didn't own already). I think he, like myself, used to pick up copies of Melody Maker and Sounds at the local record store to read about what was going on overseas. Eventually, Kerrang! came out providing fuller coverage of the metal scene, complete with glossy pictures. But in the meantime back in San Francisco, I helped a friend of mine (Ron Quintana) operate his fanzine Metal Mania (don't be confused, the name was re-appropriated by various larger publishing concerns at different times in the years since but none of them had any relationship to the original gangstas).

Back in the day, Howie Klein was a muckety muck in the music industry, haunting the local clubs like The Old Waldorf and Mabuhay Gardens. Howie hooked us up with a show on KUSF. I dubbed the show Rampage Radio, it ran in the wee hours every Saturday night (right after Big Rick Stuart finished up his late night reggae show with those dudes from Green Apple Records on Clement Street). In between hurling insults at "album oriented rock" and big-hair metal bands (posers!), we played a lot of stuff you couldn't hear anywhere else. Among the many obscure noises we aired were demos from East Bay metalheads Exodus. Amazingly, Rampage Radio is still on the air. Well, that Danish kid and one of the guys I befriended from Exodus were Lars Ulrich and Kirk Hammett, respectively. In short order, they would be playing together in a band Lars named Metallica (after haggling with Ron about not taking that name for the 'zine).

I eventually lost interest in the metal scene (not enough innovation, too many sound-alike derivatives to keep me listening); even though the music from then is still on my playlist, my repertoire has broadened widely (talk to me about gypsy style string jazz, please). I've been peripherally in touch with friends from back then. Over the years, I'd go to a few Metallica shows but the guys are always mobbed at the backstage parties, so there's not much of an opportunity to actually talk about anything. Anyway, we have little in common now. I develop software and crazy assed online services; they tour the world to perform in front of throngs. And I don't drink Jägermeister anymore. In 2000, I introduced one of the friends I've stayed in touch with, Brian Lew, who also had a fanzine Back In The Day, to editors at salon.com (where I was working at the time). He contributed a great article expressing a sentiment that I shared, dismay at Metallica's war on Napster. I don't think I've actually talked to Lars in 15 years. After seeing news coverage of him ranting about how people (his most valued asset: his fans) were ripping him off, I'm not sure I wanted to. But I think we're all over that now, let's just play Rock Band and fuhgedaboutit.

So here we are decades later and Metallica hasn't just warped the music industry, they are the industry. They're up there with Elvis and the Beatles and all of that (except, barring Cliff Burton, they're not dead). Last week, Brian pinged me that Q-Prime (Metallica's management company in New York) was trying to reach me. After a few phone calls, it turns out that Metallica is honoring a handful of us old-schoolers by inviting us to a big shindig in Cleveland for their Rock and Roll Hall of Fame induction next month. How cool is that?! I'm still kind of blown away that this is really happening (am I being punk'd??).

So, I may be leaving Technorati but I'm going to the Rock and Roll Hall of Fame! w00t! That tune keeps humming through my consciousness, "...living in sin with a safety pin, Cleveland rocks! Cleveland rocks!" but the way it sounds in my head, it's ganked up, roaring from a massive PA and a wall of Marshall stacks. So now you know what my plans will be in a few weeks and now you've learned a dozen or so things about me (if not 25) that you may not have known before.

metallica rock and roll hall of fame metal kusf cleveland nwobhm

( Mar 12 2009, 12:22:51 PM PDT ) Permalink

Tuesday March 10, 2009

More Changes At Technorati (this time, it's personal)

My post last week focused on some of the technology changes that I've been spearheading at Technorati but this time, I have a personal change to discuss. When I joined Technorati in 2004, the old world of the web was in shambles. The 1990s banner-ads-on-a-CPM-basis businesses had collapsed. The editorial teams using big workflow-oriented content management system (CMS) infrastructure (which I worked on in the '90s) were increasingly eclipsed by the ecosystem of blogs. Web 2.0 wasn't yet the word on everyone's lips. But five years ago, Dave Sifry's infectious vision for providing "connective tissue" for the blog ecosystem, tapping the attention signals and creating an emergent distributed meta-CMS helped put it there. Being of service to bloggers just sounded too good, so I jumped aboard.

Through many iterations of blogospheric expansion, building data flow, search and discovery applications, dealing with data center outages (and migrations) and other adventures, it's been a long strange trip. I've made a lot of fantastic friends, contributed a lot of insight and determination and learned a great deal along the way. I am incredibly proud of what we've built over the last five years. However, today it's time for me to move on; my last day at Technorati will be next week.

Technorati has a lot of great people, technology and possibilities. The aforementioned crawler rollout provides the technology platform with a better foundation that I'm sure Dorion and the rest of the team will build great things on. The ad platform will create an abundance of valuable opportunities for bloggers and other social media. I know from past experiences what a successful media business looks like and under Richard Jalichandra's leadership, I see all of the right things happening. The ad platform will leverage Technorati's social media data assets with the publisher and advertiser tools that will make Technorati an ad delivery powerhouse. I'm going to remain a friend of the company's and do what I can to help its continued success, but I will be doing so from elsewhere.

I want to take a moment to thank all of my colleagues, past and present, who have worked with me to get Technorati this far. The brainstorms, the hard work, the arguments and the epiphanies have been tremendously valuable to me. Thank You!

I'm not sure what's next for me. I feel strongly that the changes afoot in cloud infrastructure, open source data analytics, real time data stream technologies, location based services (specifically, GPS ubiquity) and improved mobile devices are going to build on Web 2.0. These social and technology shifts will provide primordial goo out of which new innovations will spring. And I intend to build some of them, so brace yourself for Web 3.0. It's times like these when the economy is athrash that the best opportunities emerge and running for cover isn't my style. The next few years will see incumbent players in inefficient markets crumble and more powerful paradigms take their place. I'm bringing my hammer.

( Mar 10 2009, 02:06:20 PM PDT ) Permalink Comments [4]

Wednesday March 04, 2009

Welcome to the Technorati Top 100, Mr. President

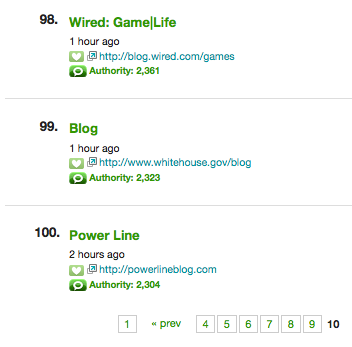

Since its inception just 6 weeks ago, the White House Blog has had a tremendous center of gravity. I noted the volume of links coming in to the White House Blog after the first week. This is an existential moment for the blogosphere because today the White House Blog has 3216 links from 2323 blogs. And so it's official: the White House Blog has reached the Technorati Top 100.

I find myself reflecting on what the top 100 looked like four years ago, after the prior presidential inauguration, and what it looks like today; the blogosphere is a very different place. Further down memory lane, who recalls when Dave Winer and Instapundit were among the top blogs? Yep, most of the small publishers have been displaced by those with big businesses behind them. Well, at least BoingBoing endures but Huffpo and Gizmo better watch out, here comes Prezbo.

technorati white house inauguration blog

( Mar 04 2009, 10:59:16 PM PST ) Permalink
